Strategic classification with randomised classifiers

Abstract

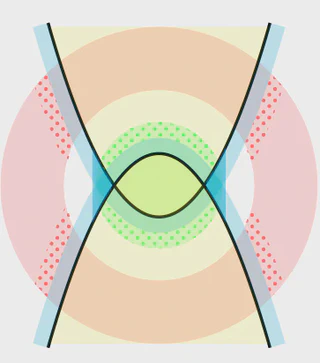

We consider the problem of strategic classification, where a learner must build a model to classify agents based on features that have been strategically modified. Previous work in this area has concentrated on the case where the learner is restricted to deterministic classifiers. In contrast, we perform a theoretical analysis of an extension to this setting that allows the learner to produce a randomised classifier. We show that, under certain conditions, the optimal randomised classifier can achieve better accuracy than the optimal deterministic classifier, and that it can never be worse. When a finite set of training data is available, we show that the excess risk of Strategic Empirical Risk Minimisation over the class of randomised classifiers is bounded in a manner similar to the deterministic case. In both the deterministic and randomised cases, the risk of the classifier produced by the learner converges to that of the corresponding optimal classifier as the volume of available training data grows. Moreover, this convergence happens at the same rate as in the i.i.d. case. We compare our findings with previous theoretical work analysing the problem of strategic classification. We conclude that randomisation has the potential to alleviate some issues that could be faced in practice without introducing any substantial downsides.
